Minecraft uses a single-scale voxel engine with 1 m blocks. Because blocks can't be subdivided, there is no easy way to build or modify anything at smaller or larger scales.

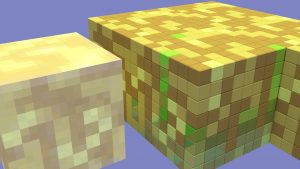

Instead of treating block textures as immutable and cosmetic, we could treat each one as a rendered projection of the sub-blocks that make up the block itself. In this way, the classic Minecraft grass and dirt blocks become a single level of nesting in a multi-level system of detail. Because all Minecraft blocks share a texture size (16×16 by default), this isn’t a particularly difficult stretch of the imagination. Minecraft blocks, too, are grouped into 16×16 chunks, each of which carries some data about biomes, heightmaps, and so forth. The problem is that all of the user-level and simulation-level operations act at the block level; none of them operate on the textures or the chunks.
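
To make the idea concrete, here is a minimal Python sketch of a nested block, assuming the 8-per-axis subdivision proposed below (so 8×8×8 = 512 children per block). The `Block` class, `SUBDIV`, and `summary_color` are hypothetical names, and averaging the children's colors is only a crude stand-in for the texture projection a real engine would do.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

SUBDIV = 8  # assumption: each block splits into 8 sub-blocks along each axis


@dataclass
class Block:
    """A voxel that is either a solid leaf or an 8x8x8 grid of smaller blocks."""
    color: tuple[int, int, int] = (0, 0, 0)   # RGB used when this block is a leaf
    children: Optional[list[Block]] = None    # SUBDIV**3 entries (x fastest), or None

    def child(self, x: int, y: int, z: int) -> Block:
        """Return the sub-block at local coordinates (x, y, z)."""
        if self.children is None:
            raise ValueError("leaf blocks have no children")
        return self.children[x + SUBDIV * (y + SUBDIV * z)]

    def summary_color(self) -> tuple[int, int, int]:
        """Low-detail stand-in for the whole block: the mean color of its contents."""
        if self.children is None:
            return self.color
        colors = [c.summary_color() for c in self.children]
        n = len(colors)
        r, g, b = (sum(col[i] for col in colors) // n for i in range(3))
        return (r, g, b)


# A block whose lower half is dirt and upper half is grass (y points up).
DIRT, GRASS = (120, 80, 40), (40, 160, 40)
mixed = Block(children=[
    Block(DIRT if (i // SUBDIV) % SUBDIV < SUBDIV // 2 else GRASS)
    for i in range(SUBDIV ** 3)
])
print(mixed.summary_color())  # (80, 120, 40)
```

Because `summary_color` recurses, the same call works whether the children are leaves or are themselves nested blocks.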

So, if Minecraft uses 16×16, is that the best scale factor? Before building a nested block detail system, we should ask ourselves:

What scale factor?

I propose an 8x scale system, in which each level is eight times larger than the one below it. With 16 levels and realistic object sizes, the levels span from sand to stars and beyond, and each one corresponds to something tangible and intuitive.

- 0.4mm (sand)

- 3mm (seed)

- 2.5cm (haft)

- 20cm (handspan)

- 1.6m (the basis of the entire proposed system, the height and armspan of an average person)

- 12.8m (house)

- 100m (city block)

- 0.82km (neighborhood)

- 6.5km (town)

- 52km (county)

- 420km (state)

- 3400km (continent, moon)

- 27Mm (rocky planet)

- 215Mm (gas planet)

- 1.7Gm (star)

- 14Gm (stellar structures: ringworlds, Dyson spheres)
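
Every entry in the list follows from two numbers: the 1.6 m person scale and the factor of eight. This Python sketch (the names `level_size_m` and `level_for_size` are hypothetical) numbers the levels from 1 (sand) to 16 and picks the nearest level for an object of a given size by rounding in log-8 space, which is one reasonable choice among several.

```python
import math

BASE_SIZE_M = 1.6   # person scale, the basis of the system
BASE_LEVEL = 5      # counting sand as level 1
FACTOR = 8          # each level is eight times the one below it
NUM_LEVELS = 16


def level_size_m(level: int) -> float:
    """Edge length in meters of a block at the given level."""
    return BASE_SIZE_M * FACTOR ** (level - BASE_LEVEL)


def level_for_size(size_m: float) -> int:
    """Nearest level for an object of the given size (rounded in log-8 space, clamped)."""
    level = BASE_LEVEL + round(math.log(size_m / BASE_SIZE_M, FACTOR))
    return max(1, min(NUM_LEVELS, level))


for level in range(1, NUM_LEVELS + 1):
    print(f"level {level:2d}: {level_size_m(level):.3g} m")
```

For example, `level_for_size(3474e3)` (the Moon's diameter in meters) lands on level 12, the continent/moon level.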

Of course, other mappings could be applied. A more cartoony world might compress the scales, with planets and stars sharing a level with towns. A hyper-real world could have many intermediate steps, with people the size of rabbits and trees the size of planets. But why go through all this trouble in the first place?

Why nested scales?

Nested blocks offer four important advantages over a flat-scale representation such as Minecraft's:

- Rapid low-cost procedural generation

- Powerful, dynamic global illumination (GI)

- In-game tileset modification

- Dynamic filtered LOD rendering

Low-Cost Procedural Generation

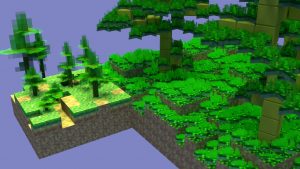

Instead of generating every branch of every tree in sight, you can generate a few trees, bake them into x-plane textures, and then place those in the world at various scales and rotations. If the player gets close to one, you can generate its voxels at that point, but in all likelihood they never will. This on-demand procedural generation allows very large areas to be populated rapidly.
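
A minimal Python sketch of that on-demand approach: each tree site records only which baked template it reuses and how it is placed, and voxels are generated (once, then cached) only for sites inside a detail radius. `TreeSite`, `LazyForest`, and the `generate_voxels` callback are hypothetical; a real engine would also evict cached voxels and choose the radius per level.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class TreeSite:
    """Placement of a pre-baked tree template; no voxels exist for it yet."""
    position: tuple[float, float, float]
    template_id: int       # which baked x-plane impostor this site reuses
    scale: float
    rotation_deg: float


class LazyForest:
    """Draw cheap impostors everywhere; build voxels only for trees the player approaches."""

    def __init__(self, sites: list[TreeSite], detail_radius: float,
                 generate_voxels: Callable[[TreeSite], Any]):
        self.sites = sites
        self.detail_radius = detail_radius
        self.generate_voxels = generate_voxels  # hypothetical, expensive generator
        self._voxels: dict[int, Any] = {}        # site index -> generated voxel data

    def view(self, player_pos: tuple[float, float, float]) -> list[tuple[str, Any]]:
        result = []
        for i, site in enumerate(self.sites):
            if math.dist(site.position, player_pos) > self.detail_radius:
                # Far away: reuse the shared baked texture, no per-tree cost.
                result.append(("impostor", site.template_id))
                continue
            if i not in self._voxels:
                # First close approach: generate this tree's voxels and keep them.
                self._voxels[i] = self.generate_voxels(site)
            result.append(("voxels", self._voxels[i]))
        return result


calls = []


def fake_generator(site: TreeSite) -> str:
    calls.append(site)
    return f"voxels for template {site.template_id}"


# 100 trees in a row, 10 m apart, reusing three baked templates.
sites = [TreeSite((float(x), 0.0, 0.0), x % 3, 1.0, 0.0) for x in range(0, 1000, 10)]
forest = LazyForest(sites, detail_radius=25.0, generate_voxels=fake_generator)
forest.view((0.0, 0.0, 0.0))
forest.view((0.0, 0.0, 0.0))
print(len(calls))  # 3: only the trees at 0, 10 and 20 m were ever generated
```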

The nested voxel scales also allow for simplified simulation when zoomed out or zoomed in. This ties into multi-timescale features, which I’ll reserve for another post. Suffice it to say that simulating fluids and societies becomes much more manageable within an adaptive-scale framework.

Dynamic Global Illumination

Minecraft does a kind of poor man’s global illumination with its light propagation system. However, since it is tied to a single scale, it can’t afford to let light propagate very far (light levels run from 15 down to 0, dropping by one per block). With a nested scale system, brighter light levels could fall off only at larger scales, allowing dynamically placed lights with rapid, low-cost propagation. If light takes on the color of the blocks it propagates past, the lighting system will have a global-illumination quality as well. This could be combined with a more conventional shadow-casting system for potentially spectacular results.
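
A rough Python sketch of why nesting helps: the flood fill below is the familiar Minecraft-style scheme (light drops by one level per cell step and is blocked by opaque cells), but the cell size is a parameter. Running the same 15-step fill on a grid one level coarser costs the same number of steps while covering eight times the distance. `propagate_light` and `reach_m` are hypothetical names; the grid is 2D for brevity, color tinting is left out, and pushing coarse light back down into finer blocks is not shown.

```python
from collections import deque


def propagate_light(size: int, source: tuple[int, int], intensity: int,
                    opaque: frozenset = frozenset()) -> dict[tuple[int, int], int]:
    """Breadth-first flood fill on a size x size grid; light drops by 1 per step."""
    light = {source: intensity}
    queue = deque([source])
    while queue:
        x, y = queue.popleft()
        level = light[(x, y)] - 1
        if level <= 0:
            continue
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if not (0 <= nx < size and 0 <= ny < size) or (nx, ny) in opaque:
                continue
            if light.get((nx, ny), 0) < level:
                light[(nx, ny)] = level
                queue.append((nx, ny))
    return light


def reach_m(cell_size_m: float, intensity: int = 15) -> float:
    """Farthest lit distance (in Manhattan cell steps) converted to meters."""
    size, c = 64, 32
    lit = propagate_light(size, (c, c), intensity)
    steps = max(abs(x - c) + abs(y - c) for x, y in lit)
    return steps * cell_size_m


print(reach_m(1.6))   # person-scale cells
print(reach_m(12.8))  # house-scale cells: same work, eight times the reach
```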

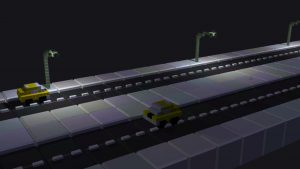

For example, a street light could light a house-scale road (size 6) using human-scale light falloff (size 5) while being modeled with handspan-scale blocks (size 4), counting sand as size 1. Something like the image above.

In-Game Tileset Modification

If you want to change the tileset in Minecraft, you alter the textures outside the game, and that’s it: no effect on gameplay. But if textures are merely low-detail representations of real in-game voxel data, then one could, at any time, edit the voxel contents of a block and have its texture update to match.
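
For example, a block's top texture could be regenerated from its contents by looking straight down each column of sub-blocks and keeping the first non-empty one. A minimal Python sketch, assuming an 8×8×8 grid indexed `voxels[x][y][z]` with y pointing up and `None` for empty space; `top_face_texture` is a hypothetical name, and a real renderer would also handle the side faces and shading.

```python
from typing import Optional

SIZE = 8  # sub-blocks per axis (assumption, matching the 8x scale factor)


def top_face_texture(voxels: list[list[list[Optional[str]]]]) -> list[list[Optional[str]]]:
    """Project a SIZE^3 voxel grid onto its top face, one texel per (x, z) column."""
    texture: list[list[Optional[str]]] = [[None] * SIZE for _ in range(SIZE)]
    for z in range(SIZE):
        for x in range(SIZE):
            for y in range(SIZE - 1, -1, -1):  # scan each column from the top down
                if voxels[x][y][z] is not None:
                    texture[z][x] = voxels[x][y][z]
                    break
    return texture


# Dirt below, one layer of grass on top, air in the highest layer.
voxels = [[["grass" if y == 6 else "dirt" if y < 6 else None for z in range(SIZE)]
           for y in range(SIZE)] for x in range(SIZE)]
before = top_face_texture(voxels)

voxels[3][6][4] = None               # the player digs out one grass sub-block...
after = top_face_texture(voxels)     # ...and the block's texture updates to match
print(before[4][3], "->", after[4][3])  # grass -> dirt
```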

This also lets players make their own block types by building them in-game. These blocks could be entire buildings or planets, which other players could then import and use in their own worlds.

Dynamic Level of Detail Filtering

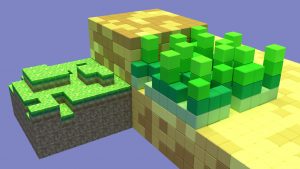

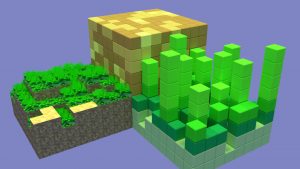

Each of the large blocks in the background represents 512x512x512 of the small blocks in the foreground (512 = 8³, so three levels of nesting). For comparison, Minecraft's world height was long capped at 256 blocks (384 since version 1.18).

Because the game has data on both the abstracted form of a block (a textured cube) and its contents (lots of smaller cubes), it could smoothly fade between the two as the player switches between interacting at different scales or changes viewpoint.
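
One simple way to drive that fade, sketched in Python: measure distance in multiples of the block's own size, so the same rule works at every level, and blend with a smoothstep. The thresholds (contents fully visible within 4 block widths, fully replaced by the textured cube beyond 8) and the name `contents_opacity` are assumptions for illustration.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite ease between 0 (at edge0) and 1 (at edge1), clamped outside."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def contents_opacity(distance_m: float, block_size_m: float,
                     near_widths: float = 4.0, far_widths: float = 8.0) -> float:
    """Opacity of a block's sub-block contents; the textured cube gets 1 minus this."""
    return 1.0 - smoothstep(near_widths * block_size_m, far_widths * block_size_m, distance_m)


for d in (10.0, 60.0, 80.0, 120.0):
    a = contents_opacity(d, block_size_m=12.8)  # a house-scale block
    print(f"{d:6.1f} m: contents {a:.2f}, cube {1 - a:.2f}")
```

Tying the thresholds to block size means a handspan-scale block starts fading at 0.8 m while a house-scale block starts at 51.2 m, with no per-level tuning.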

Level of detail is a normal thing for games to manage, but it is usually designed around maintaining photorealism. If the engine implements a nested scale system with tagged features (minerals, plants, and animals, for instance), it would be fairly easy to expose tag-specific LOD controls, letting players prioritize the features they care about. Roads and player-created structures will probably matter much more to a traveler than to a farmer, who will likely want more detail on plants and soil conditions.
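
A sketch of what tag-specific LOD controls could look like, in Python: each feature is drawn at a nesting level that coarsens by one for every eightfold increase in distance, and a per-tag priority makes a feature behave as if it were that many times closer. The tag names, the priority dictionaries, `lod_level`, and the 0.04 m reference distance (at which sand-level detail is drawn) are all hypothetical.

```python
import math


def lod_level(distance_m: float, tag: str, priorities: dict[str, float],
              near_m: float = 0.04, finest: int = 1, coarsest: int = 16) -> int:
    """Nesting level (1 = sand) at which a feature with this tag is drawn."""
    boost = priorities.get(tag, 1.0)             # > 1 means "show me more detail"
    effective = max(distance_m / boost, near_m)  # never finer than the finest level
    level = finest + int(math.log(effective / near_m, 8))  # one level per 8x distance
    return min(level, coarsest)


traveler = {"road": 8.0, "structure": 8.0}
farmer = {"plant": 8.0, "soil": 8.0}

for tag in ("road", "plant"):
    print(tag, "at 100 m:", "traveler", lod_level(100.0, tag, traveler),
          "farmer", lod_level(100.0, tag, farmer))
```

With these made-up numbers, a road 100 m away is drawn one level finer for the traveler than for the farmer, and plants the other way around.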

Still want to go back to a single grid size?

I admit that implementing a nested voxel scale engine is much more daunting than a single-scale system. Hopefully I’ve made it clear that the benefits outweigh the inevitable difficulty.

And, in the end, some sort of large-scale data management is always going to be necessary. Grouping connected features together and generating in-world elements are a constant headache in Minecraft because so much data is thrown away after feature generation. Why not build the nested hierarchy right into the engine?